Keyword [ResNeXt]

Xie S, Girshick R, Dollár P, et al. Aggregated residual transformations for deep neural networks[C]//Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on. IEEE, 2017: 5987-5995.

1. Overview

1.1. Motivation

- transition from feature engineering to network engineering

- human effort has been shifted to designing better network architectures for learning representations

- an important strategy of the Inception models is split-transform-merge

This paper proposes the ResNeXt network:

- aggregate a set of transformations with the same topology

- homogeneous multi-branch architecture

- increasing cardinality (the size of the set of transformations) is more effective than going deeper or wider when capacity is increased

1.2. Related Work

1.2.1. Multi-branch Convolutional Network

- Inception. multi-branch

- ResNet. two-branch (one branch is the identity mapping)

1.2.2. Group Convolution

- channel-wise convolution (the extreme case where the number of groups equals the number of channels)

1.2.3. Compressing Convolutional Network

- decomposition at the spatial and/or channel level

1.2.4. Ensembling

2. Method

2.1. Simple Neurons

Inner Product. The simplest neuron, an inner product, can be recast as a combination of splitting, transforming, and aggregating.

- D. the number of input channels

- x = [x_1, x_2, …, x_D]. the D-channel input vector to the neuron
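The inner product written out in these symbols, with weights $w_i$ (the underbrace labels are added here only to mark the three steps):

```latex
\underbrace{\mathbf{x} = [x_1, \dots, x_D]}_{\text{splitting}}
\;\longrightarrow\;
\underbrace{w_i x_i}_{\text{transforming}}
\;\longrightarrow\;
\underbrace{\sum_{i=1}^{D} w_i x_i}_{\text{aggregating}}
```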

2.2. Aggregated Transformations

- Network-in-Neuron. replace the elementary transformation (w_i x_i) with a more generic function, which can itself be a network

All T_i have the same topology.

- T_i. an arbitrary function; analogous to a simple neuron, it projects x into an (optionally low-dimensional) embedding and then transforms it

- C. the size of the set of transformations to be aggregated, called cardinality
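With these definitions, the aggregated transformation and the resulting residual block can be sketched as follows ($\mathcal{F}$ names the aggregated function and $\mathbf{y}$ the block output):

```latex
\mathcal{F}(\mathbf{x}) = \sum_{i=1}^{C} \mathcal{T}_i(\mathbf{x}),
\qquad
\mathbf{y} = \mathbf{x} + \sum_{i=1}^{C} \mathcal{T}_i(\mathbf{x})
```

Setting $C = D$ and $\mathcal{T}_i(\mathbf{x}) = w_i x_i$ recovers the simple-neuron inner product.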

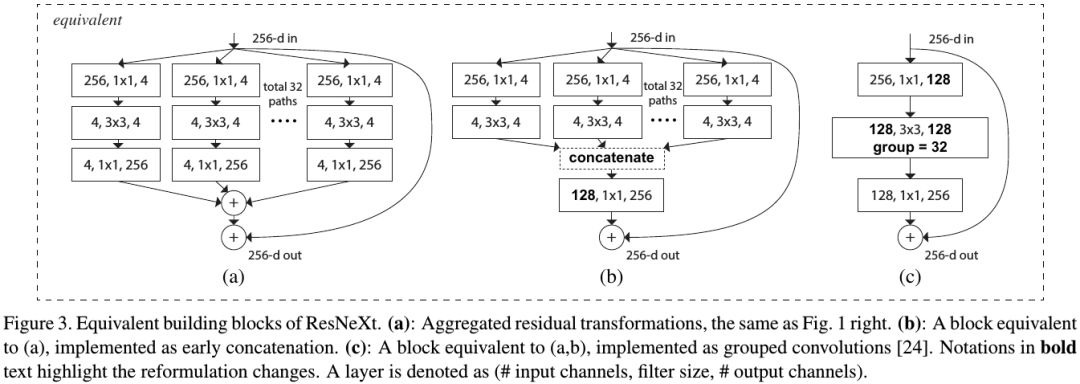

2.3. Relation to Grouped Convolutions

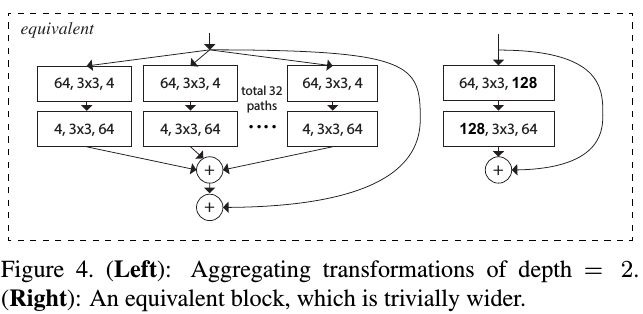
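The relation in this section is that the C-branch block can be rewritten as one wide first layer, one grouped middle layer with C groups, and one wide last layer. The sketch below checks this numerically. It is a minimal illustration under stated assumptions: a single spatial position (so the 3×3 middle layer is reduced to a per-position linear map), no batch normalization, and random weights. The helpers `aggregated_form` and `grouped_form` are hypothetical names, not code from the paper.

```python
import random


def matvec(W, x):
    """Multiply a matrix stored as a list of rows by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def relu(v):
    """Elementwise ReLU."""
    return [max(0.0, a) for a in v]


def rand_matrix(rows, cols, rng):
    """Random Gaussian matrix as a list of rows."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def aggregated_form(x, branches):
    """C parallel branches (D -> d -> d -> D) whose outputs are summed."""
    out = [0.0] * len(x)
    for A, B, E in branches:
        h = relu(matvec(B, relu(matvec(A, x))))
        out = [o + t for o, t in zip(out, matvec(E, h))]
    return out


def grouped_form(x, branches):
    """Wide first layer, grouped (block-diagonal) middle layer, wide last layer."""
    C, d = len(branches), len(branches[0][1])
    # First layer: stack all branch projections into one (C*d) x D matrix.
    A_wide = [row for A, _, _ in branches for row in A]
    # Middle layer: group g only mixes its own d channels (block-diagonal weight).
    B_grouped = [[0.0] * (C * d) for _ in range(C * d)]
    for g, (_, B, _) in enumerate(branches):
        for r in range(d):
            for c in range(d):
                B_grouped[g * d + r][g * d + c] = B[r][c]
    # Last layer: concatenate branch expansions column-wise into D x (C*d),
    # so the single matrix product performs the sum over branches.
    E_wide = [sum((E[r] for _, _, E in branches), []) for r in range(len(x))]
    h = relu(matvec(B_grouped, relu(matvec(A_wide, x))))
    return matvec(E_wide, h)


rng = random.Random(0)
D, C, d = 256, 32, 4
branches = [(rand_matrix(d, D, rng), rand_matrix(d, d, rng), rand_matrix(D, d, rng))
            for _ in range(C)]
x = [rng.gauss(0.0, 1.0) for _ in range(D)]
a = aggregated_form(x, branches)
b = grouped_form(x, branches)
max_diff = max(abs(u - v) for u, v in zip(a, b))
print(max_diff)  # only floating-point rounding differences
```

Because ReLU is elementwise, applying it to the stacked channels is the same as applying it per branch, which is why the two forms agree.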

2.4. Depth ≥ 3

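- The block depth must be ≥ 3. With depth 2 (e.g. the ResNet basic block), the aggregated transformations reduce to a trivially wide, dense module.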
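A short derivation of why depth 2 degenerates. Write each two-layer branch as $\mathcal{T}_i(\mathbf{x}) = E_i\,\sigma(A_i \mathbf{x})$, where $A_i$ and $E_i$ are linear (convolutional) layers and $\sigma$ is an elementwise nonlinearity; these symbols are introduced here for illustration, and normalization layers are ignored:

```latex
\sum_{i=1}^{C} E_i\,\sigma(A_i \mathbf{x})
= \begin{bmatrix} E_1 & \cdots & E_C \end{bmatrix}
  \sigma\!\left( \begin{bmatrix} A_1 \\ \vdots \\ A_C \end{bmatrix} \mathbf{x} \right)
```

The right-hand side is one wide layer with $C \cdot d$ channels followed by one dense layer, so no grouped layer remains. With depth ≥ 3, the middle layers stay block-diagonal, which is exactly a grouped convolution.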

2.5. Capacity

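- Left (ResNet bottleneck block). $256 \cdot 64 + 3 \cdot 3 \cdot 64 \cdot 64 + 64 \cdot 256 \approx 70\text{k}$ parameters, with proportional FLOPs

- Right (ResNeXt block). $C \cdot (256 \cdot d + 3 \cdot 3 \cdot d \cdot d + d \cdot 256) \approx 70\text{k}$ when $C = 32$, $d = 4$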
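The parameter arithmetic above can be reproduced in a few lines. A minimal sketch, assuming 256 input/output channels and bias-free convolutions, and ignoring batch-norm parameters; the helper names are hypothetical. The last loop picks, for each cardinality C, the bottleneck width d whose count is closest to the ResNet bottleneck (this closest-count rule is an assumption of the sketch).

```python
def resnet_bottleneck_params(in_ch=256, width=64):
    """1x1 (in_ch->width), 3x3 (width->width), 1x1 (width->in_ch); no biases."""
    return in_ch * width + 3 * 3 * width * width + width * in_ch


def resnext_block_params(C, d, in_ch=256):
    """C branches, each 1x1 (in_ch->d), 3x3 (d->d), 1x1 (d->in_ch); no biases."""
    return C * (in_ch * d + 3 * 3 * d * d + d * in_ch)


def width_for_cardinality(C, target, in_ch=256, max_d=256):
    """Pick the width d whose parameter count is closest to target."""
    return min(range(1, max_d + 1),
               key=lambda d: abs(resnext_block_params(C, d, in_ch) - target))


target = resnet_bottleneck_params()
print(target)                       # 69632
print(resnext_block_params(32, 4))  # 70144
for C in (1, 2, 4, 8, 32):
    d = width_for_cardinality(C, target)
    print(C, d, resnext_block_params(C, d))
```

For C = 1, 2, 4, 8, 32 this selects d = 64, 40, 24, 14, 4, showing how the bottleneck width has to shrink as cardinality grows to keep complexity roughly fixed.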

3. Experiments

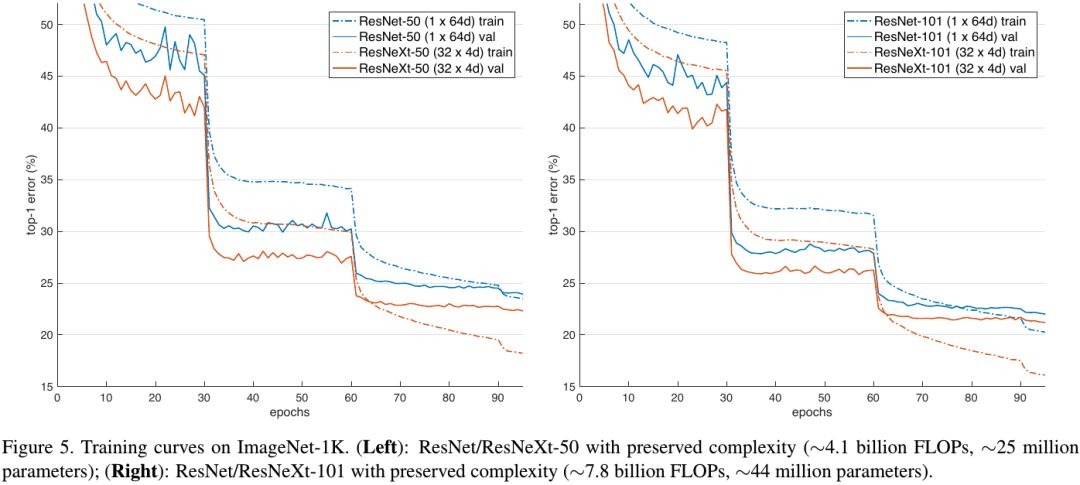

3.1. Cardinality vs Width

- with complexity preserved, increasing cardinality at the price of reducing width starts to show saturating accuracy once the bottleneck width becomes small

- more training data enlarges the validation-error gap between ResNeXt and its ResNet counterpart, as shown on the ImageNet-5K set

- this suggests the saturation on ImageNet-1K is caused by the complexity of the dataset, not by the capacity of the models

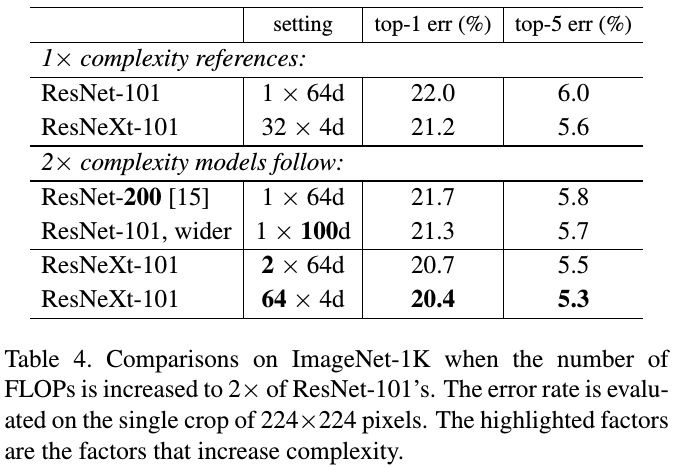

3.2. Cardinality vs Deeper/Wider

- increasing cardinality is more effective than going deeper or wider at the same (doubled) complexity

3.3. w/o Residual

3.4. Comparison

3.5. Detection